paperless-gpt

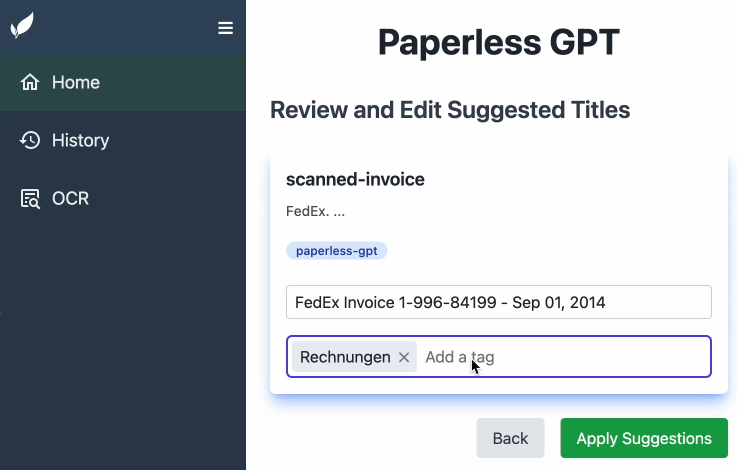

paperless-gpt seamlessly pairs with paperless-ngx to generate AI-powered document titles and tags, saving you hours of manual sorting. While other tools may offer AI chat features, paperless-gpt stands out by supercharging OCR with LLMs—ensuring high accuracy, even with tricky scans. If you’re craving next-level text extraction and effortless document organization, this is your solution.

Key Highlights

- LLM-Enhanced OCR

  Harness Large Language Models (OpenAI or Ollama) for better-than-traditional OCR, turning messy or low-quality scans into context-aware, high-fidelity text.

- Automatic Title & Tag Generation

  No more guesswork. Let the AI do the naming and categorizing. You can easily review suggestions and refine them if needed.

- Extensive Customization

  - Prompt Templates: Tweak your AI prompts to reflect your domain, style, or preference.
  - Tagging: Decide how documents get tagged, whether manually, automatically, or via OCR-based flows.

- Simple Docker Deployment

  A few environment variables, and you’re off! Compose it alongside paperless-ngx with minimal fuss.

- Unified Web UI

  - Manual Review: Approve or tweak AI’s suggestions.
  - Auto Processing: Focus only on edge cases while the rest is sorted for you.

- Opt-In LLM-based OCR

  If you opt in, your images get read by a Vision LLM, pushing boundaries beyond standard OCR tools.

Getting Started

Prerequisites

- Docker installed.

- A running instance of paperless-ngx.

- Access to an LLM provider:

  - OpenAI: An API key with models like gpt-4o or gpt-3.5-turbo.
  - Ollama: A running Ollama server with models like llama2.
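
If you go the Ollama route, it can save some head-scratching to confirm that the server really has the model you plan to use. The sketch below is a hypothetical helper, not part of paperless-gpt. It assumes Ollama's `GET /api/tags` endpoint (which lists locally pulled models) and a default host of `http://localhost:11434`; the function names `has_model` and `fetch_models` are made up for illustration.

```python
import json
import urllib.request


def has_model(tags_payload: dict, wanted: str) -> bool:
    """Return True if `wanted` appears in an Ollama /api/tags response.

    A bare name such as "llama2" matches any tag of that model
    (e.g. "llama2:latest"); a name that includes a tag must match exactly.
    """
    for model in tags_payload.get("models", []):
        name = model.get("name", "")
        if name == wanted:
            return True
        # Bare model name: compare against the part before the ":" tag.
        if ":" not in wanted and name.split(":", 1)[0] == wanted:
            return True
    return False


def fetch_models(host: str = "http://localhost:11434") -> dict:
    """Ask a running Ollama server which models it has pulled locally."""
    url = f"{host.rstrip('/')}/api/tags"
    with urllib.request.urlopen(url, timeout=5) as resp:
        return json.load(resp)
```

With a server running, `has_model(fetch_models(), "llama2")` should tell you whether a pull is still needed. The matching logic is kept separate from the network call so it can be checked without a live server.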

Installation

Docker Compose

Here’s an example docker-compose.yml to spin up paperless-gpt alongside paperless-ngx:

    version: '3.7'
    services:
      paperless-ngx:
        image: ghcr.io/paperless-ngx/paperless-ngx:latest
        # ... (your existing paperless-ngx config)
      paperless-gpt:
        image: icereed/paperless-gpt:latest
        environment:
          PAPERLESS_BASE_URL: 'http://paperless-ngx:8000'
          PAPERLESS_API_TOKEN: 'your_paperless_api_token'
          PAPERLESS_PUBLIC_URL: 'http://paperless.mydomain.com' # Optional
          MANUAL_TAG: 'paperless-gpt' # Optional, default: paperless-gpt
          AUTO_TAG: 'paperless-gpt-auto' # Optional, default: paperless-gpt-auto
          LLM_PROVIDER: 'openai' # or 'ollama'
          LLM_MODEL: 'gpt-4o' # or 'llama2'
          OPENAI_API_KEY: 'your_openai_api_key'
          LLM_LANGUAGE: 'English' # Optional, default: English
          OLLAMA_HOST: 'http://host.docker.internal:11434' # If using Ollama
          VISION_LLM_PROVIDER: 'ollama' # (for OCR) - openai or ollama
          VISION_LLM_MODEL: 'minicpm-v' # (for OCR) - minicpm-v (ollama example), gpt-4o (for openai), etc.
          AUTO_OCR_TAG: 'paperless-gpt-ocr-auto' # Optional, default: paperless-gpt-ocr-auto
          LOG_LEVEL: 'info' # Optional: debug, warn, error
        volumes:
          - ./prompts:/app/prompts # Mount the prompts directory
        ports:
          - '8080:8080'
        depends_on:
          - paperless-ngx

Pro Tip: Replace placeholders with real values and read the logs if something looks off.

Manual Setup

- Clone the Repository

      git clone https://github.com/icereed/paperless-gpt.git
      cd paperless-gpt

- Create a prompts Directory

      mkdir prompts

- Build the Docker Image

      docker build -t paperless-gpt .

- Run the Container

      docker run -d \
        -e PAPERLESS_BASE_URL='http://your_paperless_ngx_url' \
        -e PAPERLESS_API_TOKEN='your_paperless_api_token' \
        -e LLM_PROVIDER='openai' \
        -e LLM_MODEL='gpt-4o' \
        -e OPENAI_API_KEY='your_openai_api_key' \
        -e LLM_LANGUAGE='English' \
        -e VISION_LLM_PROVIDER='ollama' \
        -e VISION_LLM_MODEL='minicpm-v' \
        -e LOG_LEVEL='info' \
        -v "$(pwd)/prompts:/app/prompts" \
        -p 8080:8080 \
        paperless-gpt

Configuration

Environment Variables

| Variable | Description | Required |
|---|---|---|
| PAPERLESS_BASE_URL | URL of your paperless-ngx instance (e.g. http://paperless-ngx:8000). | Yes |
| PAPERLESS_API_TOKEN | API token for paperless-ngx. Generate one in paperless-ngx admin. | Yes |
| PAPERLESS_PUBLIC_URL | Public URL for Paperless (if different from PAPERLESS_BASE_URL). | No |
| MANUAL_TAG | Tag for manual processing. Default: paperless-gpt. | No |
| AUTO_TAG | Tag for auto processing. Default: paperless-gpt-auto. | No |
| LLM_PROVIDER | AI backend (openai or ollama). | Yes |
| LLM_MODEL | AI model name, e.g. gpt-4o, gpt-3.5-turbo, llama2. | Yes |
| OPENAI_API_KEY | OpenAI API key (required if using OpenAI). | Cond. |
| LLM_LANGUAGE | Likely language for documents (e.g. English). Default: English. | No |
| OLLAMA_HOST | Ollama server URL (e.g. http://host.docker.internal:11434). | No |
| VISION_LLM_PROVIDER | AI backend for OCR (openai or ollama). | No |
| VISION_LLM_MODEL | Model name for OCR (e.g. minicpm-v). | No |
| AUTO_OCR_TAG | Tag for automatically processing docs with OCR. Default: paperless-gpt-ocr-auto. | No |
| LOG_LEVEL | Application log level (info, debug, warn, error). Default: info. | No |
| LISTEN_INTERFACE | Network interface to listen on. Default: :8080. | No |
| WEBUI_PATH | Path for static content. Default: ./web-app/dist. | No |
| AUTO_GENERATE_TITLE | Generate titles automatically if paperless-gpt-auto is used. Default: true. | No |
| AUTO_GENERATE_TAGS | Generate tags automatically if paperless-gpt-auto is used. Default: true. | No |
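
A quick pre-flight check can catch a missing variable before the container starts. The following is a minimal sketch based only on the Required column of the table above; it is not how paperless-gpt itself validates its configuration. The helper name `missing_variables` is hypothetical, and treating a Vision LLM provider of openai as also needing OPENAI_API_KEY is an assumption.

```python
import os

# Variables the table above marks as required ("Yes").
ALWAYS_REQUIRED = (
    "PAPERLESS_BASE_URL",
    "PAPERLESS_API_TOKEN",
    "LLM_PROVIDER",
    "LLM_MODEL",
)


def missing_variables(env: dict) -> list:
    """Return the names of required variables that are unset or empty."""
    missing = [name for name in ALWAYS_REQUIRED if not env.get(name)]
    # OPENAI_API_KEY is conditional: the table requires it when using OpenAI.
    # Assumption: an OpenAI-backed Vision LLM needs the same key.
    providers = (env.get("LLM_PROVIDER"), env.get("VISION_LLM_PROVIDER"))
    if "openai" in providers and not env.get("OPENAI_API_KEY"):
        missing.append("OPENAI_API_KEY")
    return missing


if __name__ == "__main__":
    problems = missing_variables(dict(os.environ))
    print("Missing:", ", ".join(problems) if problems else "none")
```

Run it in the same shell (or with the same env file) you use for `docker run`; an empty result means every variable the table marks as required is set.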

Custom Prompt Templates

paperless-gpt’s flexible prompt templates let you shape how AI responds:

- title_prompt.tmpl: For document titles.
- tag_prompt.tmpl: For tagging logic.
- ocr_prompt.tmpl: For LLM OCR.

Mount them into your container via:

    volumes:
      - ./prompts:/app/prompts

Then tweak at will. paperless-gpt reloads the templates automatically on startup, so a container restart picks up your edits.
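
To see at a glance which templates are present in the mounted directory, something like the sketch below may help. It only checks for the three file names listed above; the helper name `missing_templates` is hypothetical. Whether paperless-gpt fills in defaults for absent files is not covered here, so a missing file is not necessarily an error.

```python
from pathlib import Path

# Template file names from the list above.
TEMPLATE_NAMES = ("title_prompt.tmpl", "tag_prompt.tmpl", "ocr_prompt.tmpl")


def missing_templates(prompts_dir) -> list:
    """Return the template names that are absent from `prompts_dir`."""
    root = Path(prompts_dir)
    return [name for name in TEMPLATE_NAMES if not (root / name).is_file()]
```

For the setup shown here you would call `missing_templates("./prompts")` on the host, since that directory is what gets mounted at /app/prompts.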

Usage

- Tag Documents

  - Add paperless-gpt or your custom tag to the docs you want to AI-ify.

- Visit Web UI

  - Go to http://localhost:8080 (or your host) in your browser.

- Generate & Apply Suggestions

  - Click “Generate Suggestions” to see AI-proposed titles/tags.
  - Approve, edit, or discard. Hit “Apply” to finalize in paperless-ngx.

- Try LLM-Based OCR (Experimental)

  - If you enabled VISION_LLM_PROVIDER and VISION_LLM_MODEL, let AI-based OCR read your scanned PDFs.
  - Tag those documents with paperless-gpt-ocr-auto (or your custom AUTO_OCR_TAG).

Tip: The entire pipeline can be fully automated if you prefer minimal manual intervention.
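
If you would rather script the tagging step than click through the paperless-ngx UI, one option is the paperless-ngx REST API. The sketch below is an illustration, not part of paperless-gpt. It assumes the paperless-ngx `POST /api/documents/bulk_edit/` endpoint with the `add_tag` method and token authentication, and it expects the numeric ID of the tag (for example the ID of paperless-gpt-auto), which you would look up in paperless-ngx yourself. The function name `build_add_tag_request` is hypothetical.

```python
import json
import urllib.request


def build_add_tag_request(base_url: str, token: str, document_ids: list, tag_id: int) -> urllib.request.Request:
    """Build (but do not send) a bulk request that adds one tag to many documents."""
    body = {
        "documents": list(document_ids),
        "method": "add_tag",
        "parameters": {"tag": tag_id},
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/documents/bulk_edit/",
        data=json.dumps(body).encode("utf-8"),
        headers={
            # paperless-ngx token auth uses the "Token <value>" scheme.
            "Authorization": f"Token {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the result to `urllib.request.urlopen` sends it. Building the request separately makes it easy to inspect the URL and payload before anything touches your archive.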

Contributing

Pull requests and issues are welcome!

- Fork the repo
- Create a branch (feature/my-awesome-update)
- Commit changes (git commit -m "Improve X")
- Open a PR

Check out our contributing guidelines for details.

License

paperless-gpt is licensed under the MIT License. Feel free to adapt and share!

Disclaimer

This project is not officially affiliated with paperless-ngx. Use at your own risk.

paperless-gpt: The LLM-based companion your doc management has been waiting for. Enjoy effortless, intelligent document titles, tags, and next-level OCR.